Synthesis of Realistic Example Face Images (SREFI)

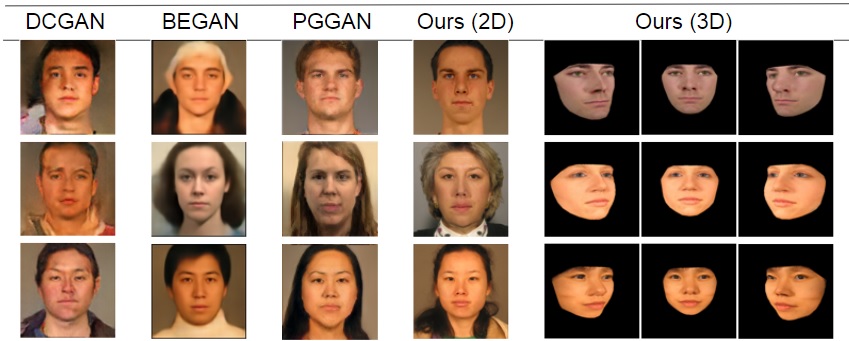

We propose a novel face synthesis approach that can generate an arbitrarily large number of synthetic images of both real and synthetic identities with different facial yaw, shape and resolution. Using this approach, a face image dataset can be expanded both in the number of identities represented and in the number of images per identity, without the identity-labeling and privacy complications that come with downloading images from the web. The synthesized images can be used to augment datasets for training CNNs, or as massive distractor sets for biometric verification experiments, without privacy concerns. Law enforcement agencies can also use this technique to train forensic experts to recognize faces. Our method samples face components from a pool of face images of multiple real identities to generate a synthetic texture. A real 3D head model compatible with the generated texture is then used to render it under different facial yaw transformations. We perform multiple quantitative experiments to assess the effectiveness of our synthesis procedure for CNN training and its potential for generating distractor face images. We also compare our method with popular GAN models in terms of visual quality and execution time. We are currently building a GAN model, learned using regularized autoencoders, to hallucinate a realistic forehead, head, bust and background onto our synthetic 3D face images.
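The abstract describes a two-stage pipeline: sample face components from a pool of real faces to build a synthetic texture, then render that texture on a 3D head model under different yaw angles. Below is a toy Python sketch of those two stages, not the authors' implementation. It assumes each face has already been split into aligned component patches (the component names `eyes`, `nose`, `mouth`, `jaw`, the list-of-rows patch layout, and all function names are hypothetical), it ignores seam blending and the choice of a compatible head model, and it uses a plain rotation about the vertical axis plus orthographic projection in place of a real renderer.

```python
import math
import random
from typing import Dict, List, Tuple

# Hypothetical component names; the paper's actual face segmentation may differ.
COMPONENTS = ("eyes", "nose", "mouth", "jaw")

Patch = List[List[int]]            # toy grayscale patch: rows of pixel values
Face = Dict[str, Patch]            # component name -> aligned patch
Vertex = Tuple[float, float, float]


def synthesize_texture(pool: List[Face], rng: random.Random) -> Tuple[Face, Dict[str, int]]:
    """Build a synthetic texture by drawing each component from a randomly
    chosen face in the pool. Also returns, per component, the index of the
    source face it was taken from."""
    texture: Face = {}
    sources: Dict[str, int] = {}
    for name in COMPONENTS:
        idx = rng.randrange(len(pool))
        sources[name] = idx
        # Copy the rows so later edits to the texture never mutate the pool.
        texture[name] = [row[:] for row in pool[idx][name]]
    return texture, sources


def rotate_yaw(vertices: List[Vertex], yaw_deg: float) -> List[Vertex]:
    """Rotate head-model vertices about the vertical (y) axis by yaw_deg,
    using the standard rotation matrix R_y."""
    t = math.radians(yaw_deg)
    c, s = math.cos(t), math.sin(t)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in vertices]


def project_orthographic(vertices: List[Vertex]) -> List[Tuple[float, float]]:
    """Drop the depth coordinate to get 2D image-plane points (toy camera)."""
    return [(x, y) for x, y, _ in vertices]


if __name__ == "__main__":
    rng = random.Random(42)
    # Toy pool: in face i, every pixel of every component has value i.
    pool = [{name: [[i, i], [i, i]] for name in COMPONENTS} for i in range(5)]
    # Toy "head model": three vertices standing in for a full 3D mesh.
    head = [(0.0, 0.0, 1.0), (-0.5, 0.3, 0.6), (0.5, 0.3, 0.6)]
    for k in range(3):
        _, sources = synthesize_texture(pool, rng)
        for yaw in (-30, 0, 30):
            pts = project_orthographic(rotate_yaw(head, yaw))
            print(k, sources, yaw, [(round(u, 3), round(v, 3)) for u, v in pts])
```

Because each component can come from a different source face, even this toy pool of 5 faces and 4 components admits 5^4 = 625 component combinations, which hints at how a modest pool of real faces could yield many distinct synthetic textures.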

Sandipan Banerjee, John Bernhard, Walter Scheirer, Kevin Bowyer, Patrick Flynn

SREFI: Synthesis of Realistic Example Face Images, Sandipan Banerjee, John S. Bernhard, Walter J. Scheirer, Kevin Bowyer, Patrick Flynn, Proceedings of the IAPR/IEEE International Joint Conference on Biometrics (IJCB), October 2017.
