Evaluation of Machine Learning Model Generalization

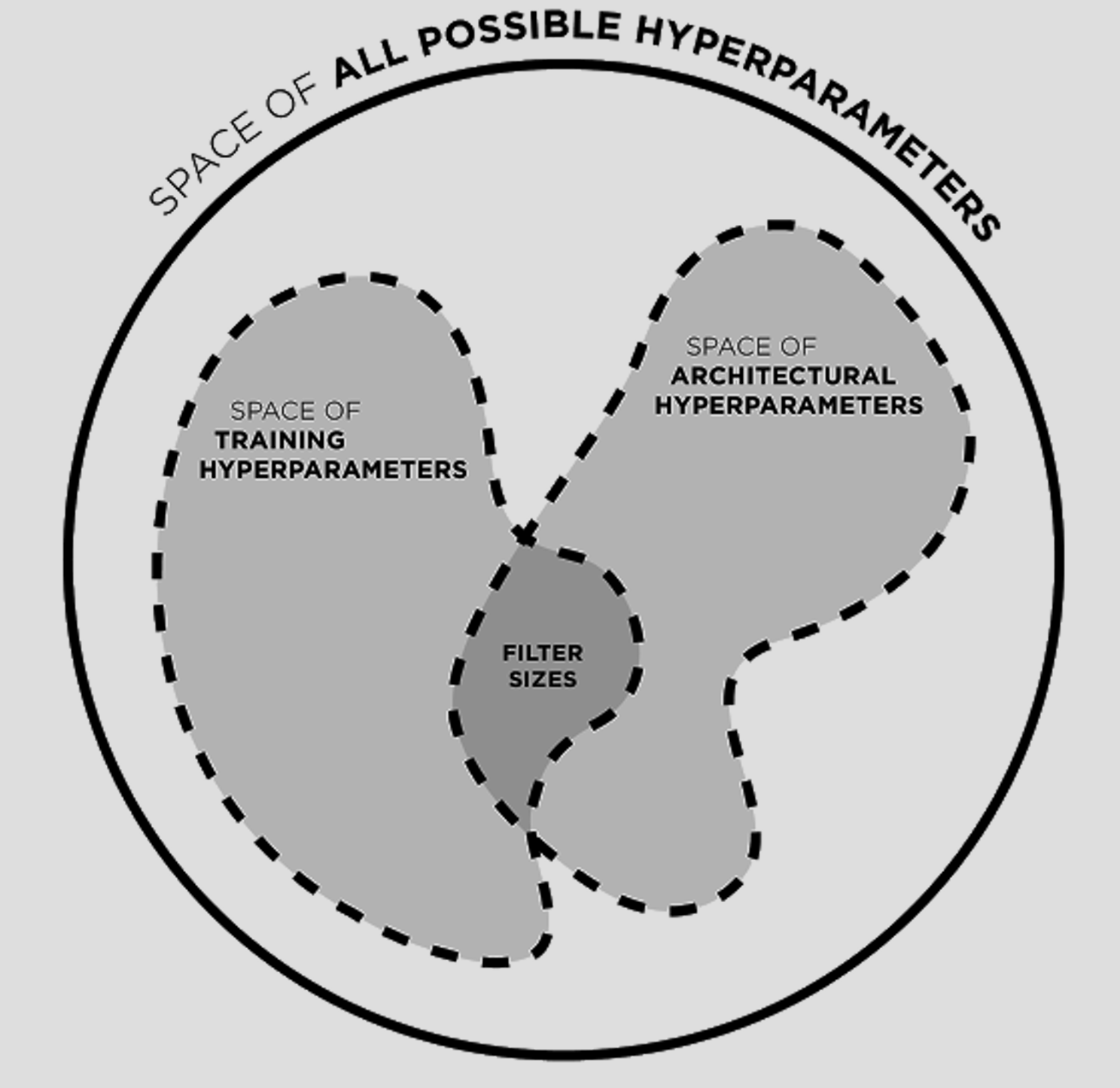

Obtaining a reliable measure of model generalization remains a challenge in machine learning. The goal of this project is to investigate alternative techniques for evaluating a machine learning model's expected generalization. The project is inspired by the observation that neural activation similarity corresponds to instance similarity. Theoretically, a machine learning model that mirrored this observation would exhibit a consistent understanding of stimuli, one that would extrapolate to unseen stimuli or variations of known stimuli. This project uses representational dissimilarity matrices (RDMs), which characterize a model by the pairwise dissimilarity of its activations in response to stimuli. The project experiments with a variety of techniques for constructing and using RDMs within and across model training, validation, and testing.
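To make the idea of an RDM concrete, the following is a minimal sketch of one common construction, not necessarily the one used in this project. It assumes that each stimulus yields a flat activation vector and that dissimilarity is measured as 1 minus the Pearson correlation. The function names `compute_rdm` and `compare_rdms` are hypothetical and chosen for illustration only.

```python
import numpy as np


def compute_rdm(activations):
    """Build an RDM from an array of shape (n_stimuli, n_features).

    Assumption: dissimilarity is 1 - Pearson correlation between the
    activation vectors of each pair of stimuli.
    """
    acts = np.asarray(activations, dtype=float)
    # np.corrcoef treats each row as one variable, so the result is an
    # (n_stimuli, n_stimuli) correlation matrix.
    return 1.0 - np.corrcoef(acts)


def compare_rdms(rdm_a, rdm_b):
    """Correlate the upper triangles of two RDMs of equal shape.

    Assumption: Pearson correlation of the off-diagonal entries is used
    as the agreement score; other choices (e.g. rank correlation) exist.
    """
    rows, cols = np.triu_indices_from(rdm_a, k=1)  # skip the diagonal
    return np.corrcoef(rdm_a[rows, cols], rdm_b[rows, cols])[0, 1]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical activations: 6 stimuli, 32 features, from two "models".
    acts_a = rng.normal(size=(6, 32))
    acts_b = acts_a + 0.1 * rng.normal(size=(6, 32))
    score = compare_rdms(compute_rdm(acts_a), compute_rdm(acts_b))
    print(f"RDM agreement: {score:.3f}")
```

Under these assumptions, two systems that organize the same stimuli similarly produce RDMs with a high agreement score, regardless of how many features each system has, since only the stimulus-by-stimulus structure is compared.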

A Neurobiological Cross-domain Evaluation Metric for Predictive Coding Networks, Nathaniel Blanchard, Jeffery Kinnison, Brandon RichardWebster, Pouya Bashivan, Walter J. Scheirer, May 2018: [arxiv] [pdf]