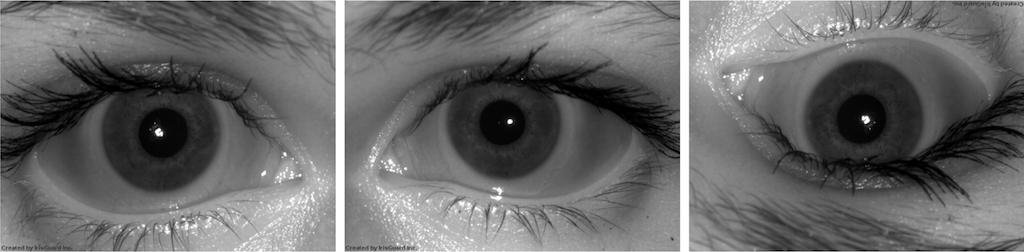

Automatic Classification of Iris Images (ACII)

The purpose of the ACII Project was to develop algorithms and a software tool to label the orientation of an iris image. The input is an iris image acquired using a commercial iris recognition sensor. The output is a label for the left-right orientation and a label for the up-down orientation of the image. The methods include hand-crafted features with SVM classification as well as deep-learning-based solutions. The accuracy of the labeling was estimated using existing iris image datasets previously acquired at the University of Notre Dame. The SVM-based approach achieved an average correct classification rate above 95% (89%) for recognition of left-right (up-down) orientation when tested on subject-disjoint and camera-disjoint data, and 99% (97%) when the images were acquired by the same sensor. The deep-learning-based approach performed better in same-sensor experiments but generalized slightly worse to unknown sensors than the SVM-based approach.

Kevin Bowyer, Patrick Flynn, Rosaura G. VidalMata, Adam Czajka
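
The subject-disjoint and camera-disjoint evaluation mentioned in the abstract can be illustrated with a minimal sketch. This is not the project's code: the function name `disjoint_split`, the record keys `subject`, `sensor`, and `eye`, the sensor names, and the 30% test fraction are all hypothetical choices made for illustration. The idea is only that no subject and no sensor contributes images to both the training and the test partition.

```python
import random

def disjoint_split(samples, held_out_sensor, test_fraction=0.3, seed=0):
    """Return (train, test) with no shared subjects and no shared sensors.

    Each sample is a dict with hypothetical keys 'subject' and 'sensor'.
    """
    # Pick the test subjects first, so that subject identity never leaks.
    subjects = sorted({s["subject"] for s in samples})
    random.Random(seed).shuffle(subjects)
    n_test = max(1, round(len(subjects) * test_fraction))
    test_subjects = set(subjects[:n_test])

    # Train: remaining subjects, imaged by any sensor except the held-out one.
    train = [s for s in samples
             if s["subject"] not in test_subjects
             and s["sensor"] != held_out_sensor]
    # Test: held-out subjects, imaged by the held-out sensor only.
    test = [s for s in samples
            if s["subject"] in test_subjects
            and s["sensor"] == held_out_sensor]
    return train, test

# Toy records standing in for iris images (10 subjects, 2 sensors, 2 eyes).
samples = [
    {"subject": f"s{i:02d}", "sensor": sensor, "eye": eye}
    for i in range(10)
    for sensor in ("sensor_A", "sensor_B")
    for eye in ("left", "right")
]

train, test = disjoint_split(samples, held_out_sensor="sensor_B")
print(len(train), len(test))
```

A same-sensor experiment would drop the sensor filter and keep only the subject-disjoint part of the split. Note that this strict split discards images that satisfy only one of the two conditions, which is the price of keeping both partitions fully disjoint.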